Table of Contents

This guide helps beginners understand cloud computing. The table of contents is below, and links to each section are on the side.

1. Kernel

We will cover the definition of the kernel, why it is needed, how it works, and its importance.

2. Containerization

We will cover the definition of containerization, why it is used, how it works, and its importance.

3. Virtualization

We will cover the definition of virtualization, why it is used, how it works, and its importance.

4. VMs vs Baremetal

We will cover how VMs and bare metal servers work, and the differences between the two.

5. Containerization vs. Virtualization

We will cover the definitions, strengths, and limitations of containerization and virtualization, and the differences between them.

6. WSL (Windows Subsystem for Linux)

We will cover the definition of WSL, why it is used, how it works, its importance, and the installation process.

7. Kubernetes vs Docker

We will cover the differences between Kubernetes vs Docker, and why you would pick one over the other.

8. Docker

We will cover the definition of Docker, why it is used, how it works, its importance, and the installation process.

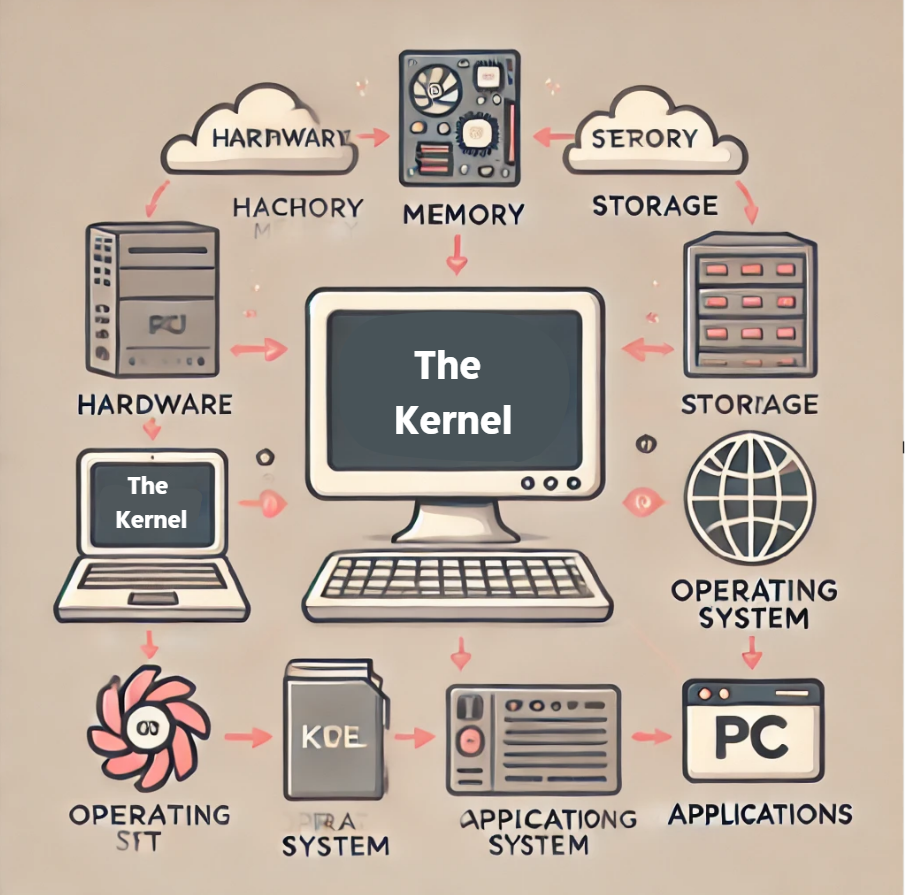

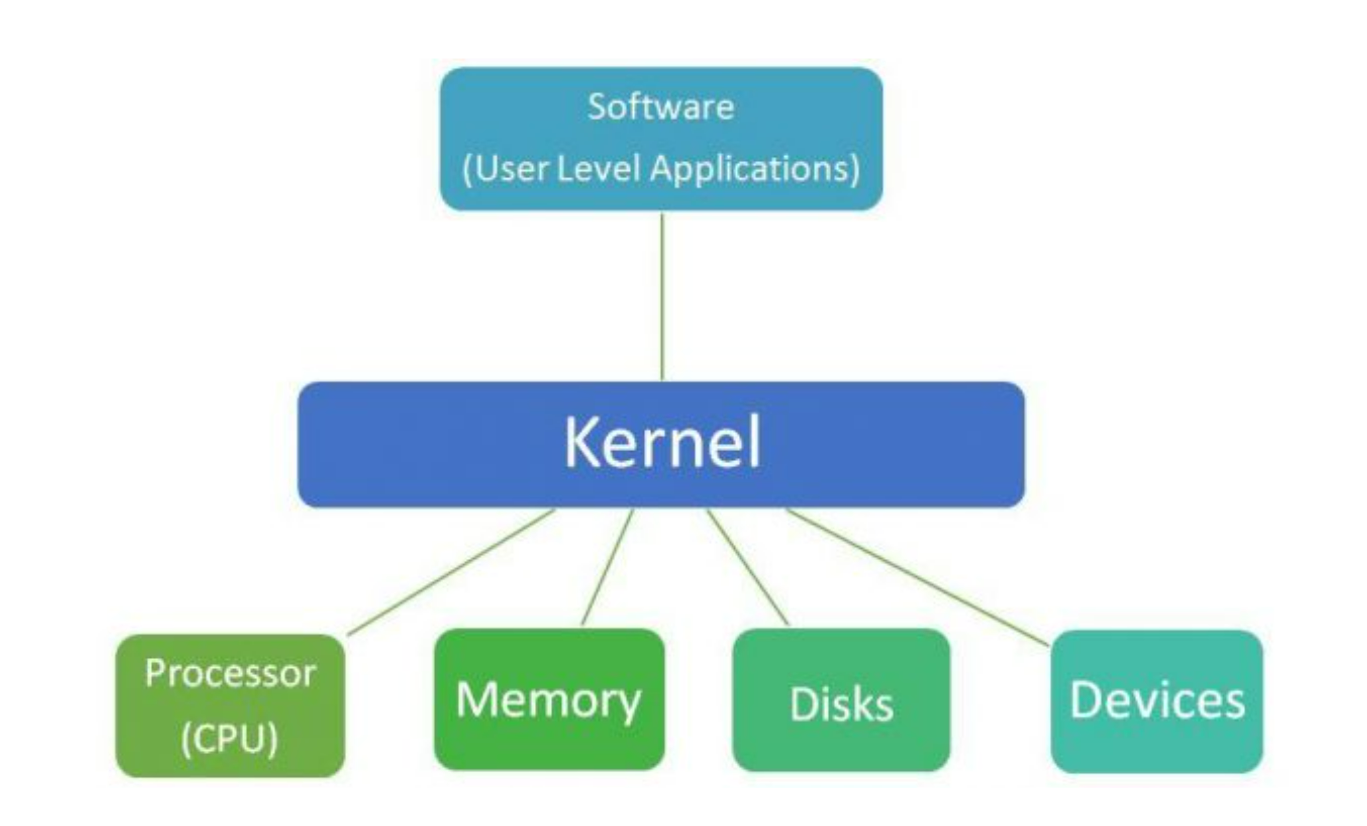

Kernel

Definition

The Kernel is the core part of the operating system that manages system resources and allows communication between software and hardware.

Why Kernel?

The kernel is responsible for many important tasks. It allocates and manages the system’s memory. It manages devices by handling communication between the system and hardware devices. It manages the file system by handling data storage and retrieval. Lastly, it manages all processes and threads, including their creation, execution, and termination.

Importance

Without the kernel, a system would be chaotic, insecure, and inefficient. The kernel is the central control that allows the entire system to function properly, and it keeps the system efficient by preventing it from becoming unresponsive or slowing down. The kernel also provides security by maintaining overall stability and protecting the system from unauthorized access. Finally, it simplifies hardware interaction, which enables compatibility and makes development easier.

How it Works

Manages Hardware: The kernel acts as a bridge between applications and hardware, translating requests from applications into actions that the hardware can understand.

Handles Processes: The kernel controls the execution of processes, keeps them isolated from one another, and shares CPU time among them.

Controls Memory: The kernel manages how memory is allocated, used, and swapped between applications to ensure smooth operation.

Facilitates Communication: Through system calls and APIs, it allows user applications to request resources and interact with the system.

Maintains Security: The kernel enforces system security through access control, process isolation, and user authentication.
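The "Facilitates Communication" point can be made concrete with a small sketch. It assumes Python's standard `os` module, whose functions are thin wrappers over system calls; the helper name `pipe_round_trip` is made up for this illustration.

```python
import os


def pipe_round_trip(message: bytes) -> bytes:
    """Send bytes through a kernel-managed pipe and read them back."""
    # os.pipe() asks the kernel for a pair of connected file descriptors.
    read_fd, write_fd = os.pipe()
    try:
        # os.write() and os.read() hand the data to the kernel, which
        # buffers it between the two ends of the pipe.
        os.write(write_fd, message)
        return os.read(read_fd, len(message))
    finally:
        # Give the descriptors back to the kernel when done.
        os.close(read_fd)
        os.close(write_fd)


if __name__ == "__main__":
    # The process ID is also assigned and tracked by the kernel.
    print("process id:", os.getpid())
    print(pipe_round_trip(b"hello kernel"))
```

The application never touches the hardware or the pipe buffer directly; every step is a request that the kernel carries out on its behalf.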

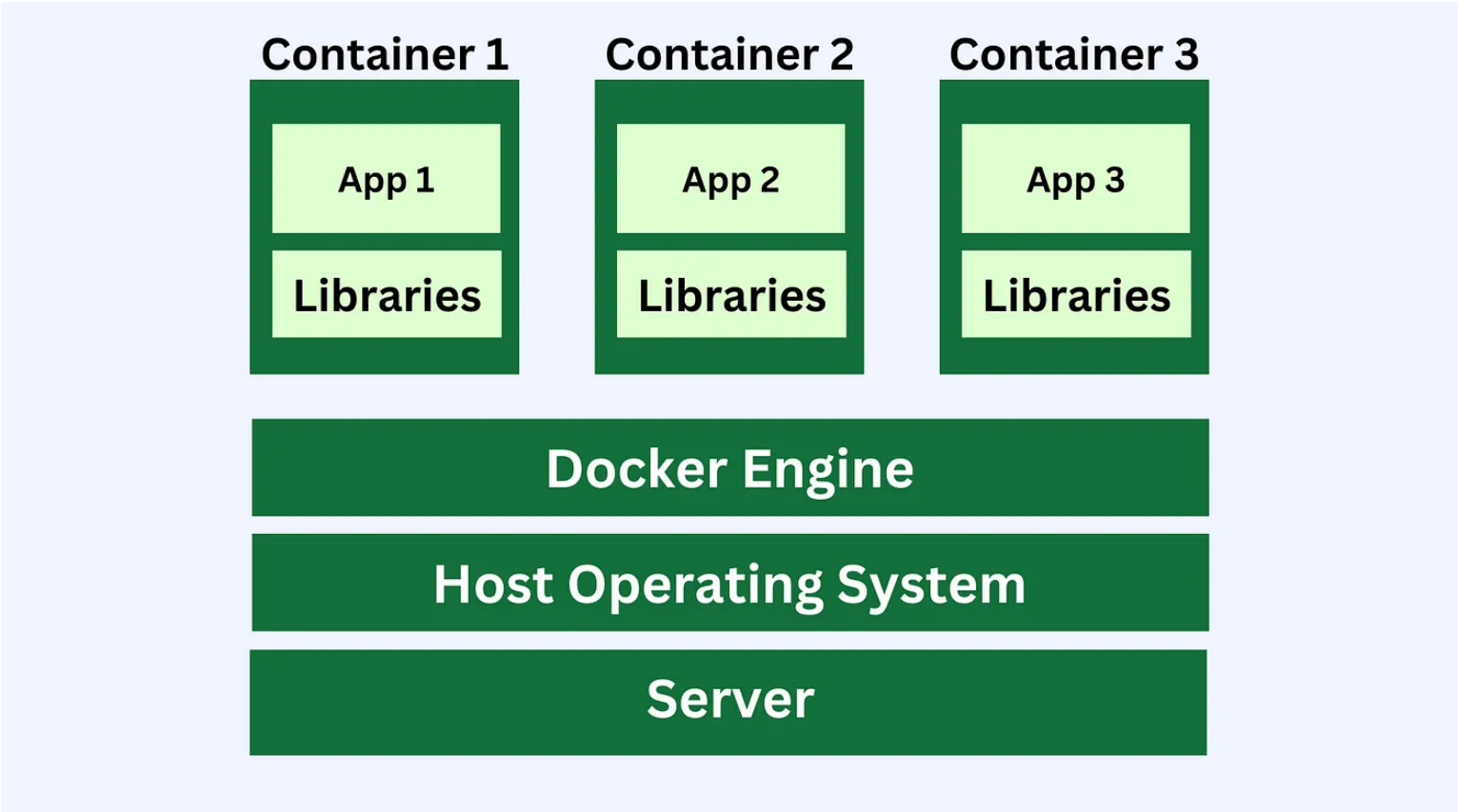

Containerization

Definition

Containerization is a method of packaging software into a single “container.” This container can run consistently on any computing environment, whether it’s a developer’s laptop, a server, or a cloud platform.

Why Containerization?

Containerization eliminates issues where software works on one machine but not another because of differences in environments. Containerization bundles the software and all its dependencies into a portable container.

Importance

Containers provide a consistent environment for software applications, making it easier to develop, test, and deploy across different platforms.

How it Works

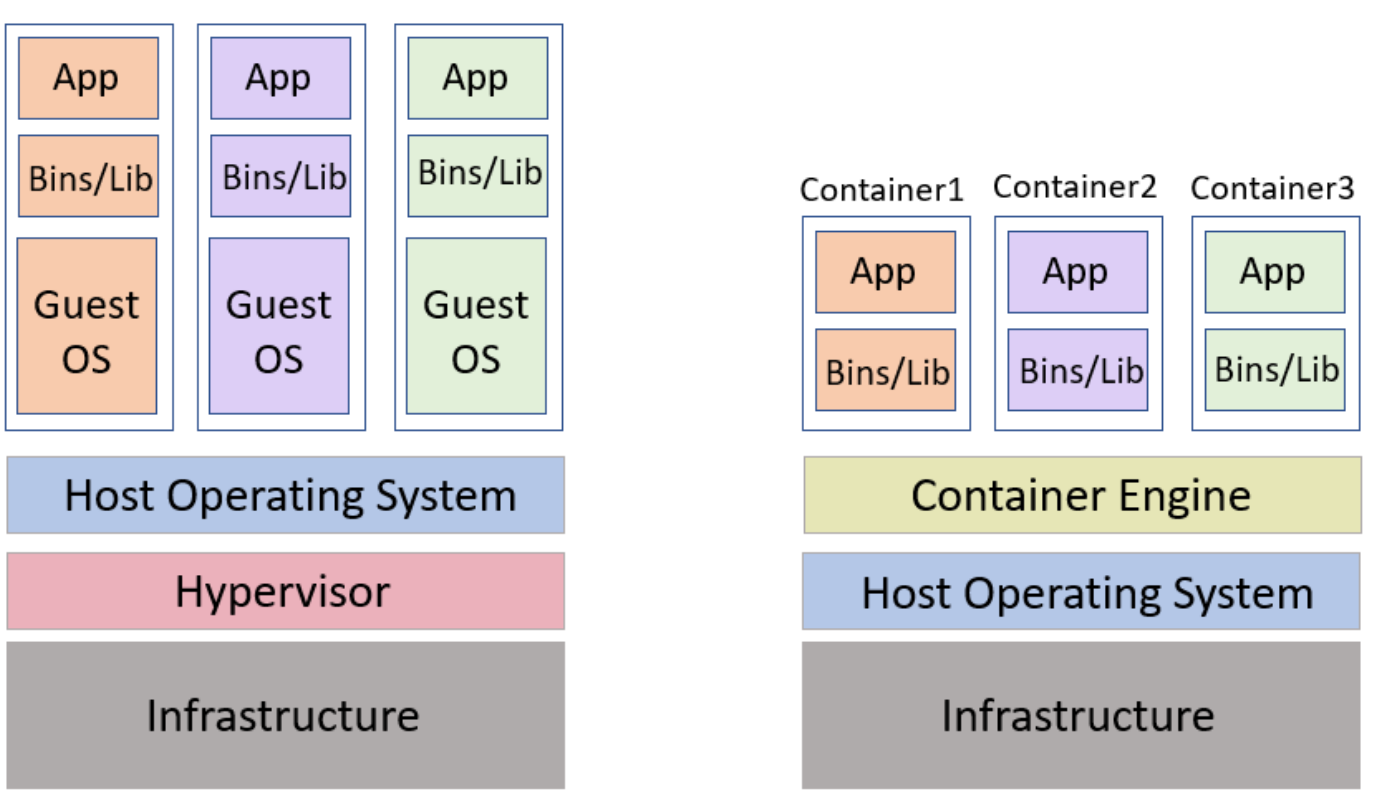

Containers enable the creation of isolated environments for each application by utilizing the system’s operating system (OS). Unlike virtual machines (VMs), which require a full OS for each instance, containers share the host’s OS, making them lightweight and faster to start. This form of virtualization allows multiple applications to run in isolated “containers” on the same computer. Since containers share the host’s OS (e.g., Windows, macOS, or Linux), they use less storage and memory, offering fast startup times while maintaining application isolation.
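One way to observe the shared-OS idea is to ask which kernel a process is running on. The sketch below uses Python's standard `platform` module; the function name `describe_kernel` is made up for this illustration. The assumption is a Linux host: a container started there has no kernel of its own, so the script should report the same release inside the container as on the host. On Windows or macOS, container tools typically run containers inside a small Linux VM, so the release shown would be that VM's kernel instead.

```python
import platform


def describe_kernel() -> dict:
    """Return the kernel name and release this process is running on."""
    info = platform.uname()
    return {"system": info.system, "release": info.release}


if __name__ == "__main__":
    # Run this once on the host and once inside a container to compare.
    print(describe_kernel())
```

A full VM, by contrast, boots its own kernel, so the same script inside a VM reports whatever kernel the guest OS ships.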

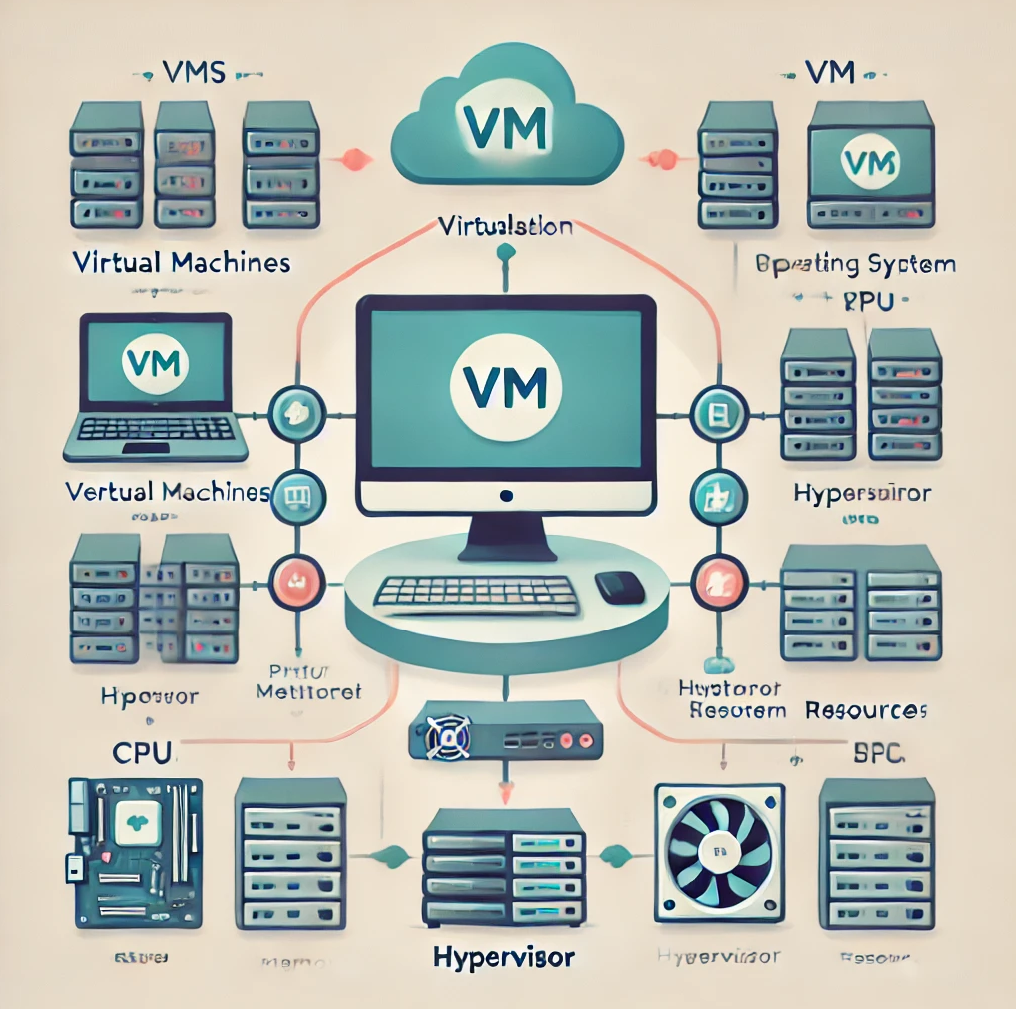

Virtualization

Definition

Virtualization is the process of creating virtual instances of physical hardware such as servers, storage devices, or network resources.

Why Virtualization?

Virtualization allows one physical machine to make full use of its resources by hosting numerous virtual machines. It also supports horizontal scaling: more VMs can be spun up across multiple servers to handle increasing demand.

Importance

Virtualization provides flexibility and efficiency in managing IT resources. This allows businesses to save money by reducing hardware costs and getting the most value out of their resources.

How it Works

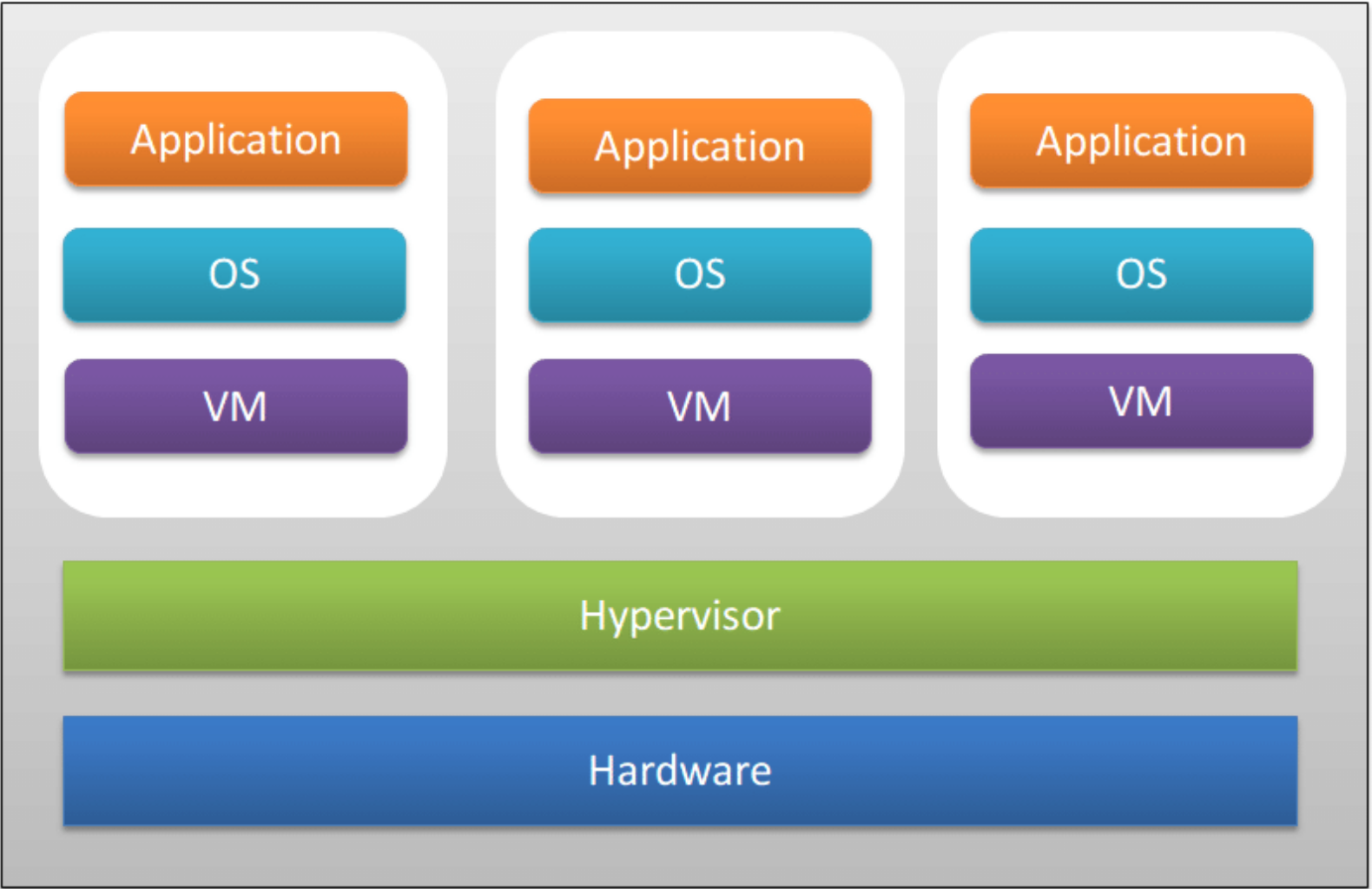

Virtualization works by using a hypervisor, which is software that sits between the physical hardware and the virtual machines (VMs). A VM operates like an independent computer with its own OS and applications, separate from the host's OS. Unlike containers, VMs require a full OS for each instance, which makes them more resource-intensive. This setup provides strong isolation between environments, allowing multiple operating systems to run on the same physical machine. However, because each VM runs a complete OS, VMs are heavier (using more storage and memory) and slower to start compared to containers.

Baremetal vs VMs

Summary

When it comes to hosting servers or other computing resources (such as database servers, OS simulations, and development/testing tools), two common approaches are using Bare Metal servers or Virtual Machines (VMs). Both methods offer distinct advantages and serve different purposes depending on the specific requirements of the workload.

How Bare Metal Works

Bare metal servers run directly on physical hardware, with no virtualization layer. The operating system installed on the server has full control over the hardware, and all resources are dedicated to the single instance running on that server.

Pros

Maximum Performance: Without the hypervisor overhead, bare metal servers can fully utilize all hardware resources.

Low Latency: Ideal for applications requiring real-time processing or high-speed data access since there’s no layer of abstraction between the hardware and software.

Full Control: You have direct access to the hardware, allowing for deep customization and tuning.

Cons

Lack of Flexibility: You can only run one operating system per physical server. If you need to run multiple environments, you need multiple servers.

Scalability Issues: Scaling requires the addition of more physical servers, which can be more complex and costly compared to creating new VMs.

Underutilization Risk: If the workload doesn’t fully use the hardware, you’re paying for resources that aren’t being used.

How Virtual Machines Work

VMs run on a hypervisor (either Type 1, a bare-metal hypervisor, or Type 2, a hosted hypervisor), which is a software layer that sits on top of physical hardware and divides the hardware resources (CPU, memory, storage, etc.) among multiple virtual machines. Each VM can run its own operating system and applications independently, as though it were on a separate physical machine.

Pros

Resource Sharing: Multiple VMs share the same physical server, leading to better hardware utilization.

Isolation: Each VM is isolated, meaning one VM can fail without affecting others.

Flexibility: Easily create, modify, and delete VMs to meet workload demands.

Cost Efficiency: VMs allow multiple workloads to run on the same physical hardware, reducing the need for multiple servers.

Cons

Performance Overhead: The hypervisor introduces a slight performance hit since it mediates between the VMs and the physical hardware.

Resource Contention: If too many VMs share the same physical resources, performance can degrade.
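The utilization trade-off can be sketched with a toy model. Everything here is an assumption made for illustration: the `Host` class, the function names, the workload sizes, and the simple first-fit placement rule. It also ignores hypervisor overhead and contention, which a real capacity plan would have to account for.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical server with a fixed amount of CPU and memory."""
    cpu_cores: int
    memory_gb: int
    vms: list = field(default_factory=list)  # (cpu, memory) per VM

    def fits(self, cpu: int, mem: int) -> bool:
        used_cpu = sum(vm[0] for vm in self.vms)
        used_mem = sum(vm[1] for vm in self.vms)
        return (used_cpu + cpu <= self.cpu_cores
                and used_mem + mem <= self.memory_gb)


def hosts_needed_bare_metal(workloads: list) -> int:
    """Bare metal in this model: one physical server per workload."""
    return len(workloads)


def hosts_needed_with_vms(workloads: list, cpu_cores: int, memory_gb: int) -> int:
    """Place each workload as a VM on the first host with room (first fit)."""
    hosts = []
    for cpu, mem in workloads:
        if cpu > cpu_cores or mem > memory_gb:
            raise ValueError("workload is larger than a single host")
        for host in hosts:
            if host.fits(cpu, mem):
                host.vms.append((cpu, mem))
                break
        else:
            # No existing host has room, so add another physical server.
            new_host = Host(cpu_cores, memory_gb)
            new_host.vms.append((cpu, mem))
            hosts.append(new_host)
    return len(hosts)


if __name__ == "__main__":
    # Hypothetical workloads as (CPU cores, memory in GB).
    workloads = [(4, 8), (2, 4), (8, 16), (2, 8), (4, 16)]
    print("bare metal servers:", hosts_needed_bare_metal(workloads))
    print("servers with VMs:", hosts_needed_with_vms(workloads, 16, 32))
```

With these made-up numbers, five workloads need five bare metal servers but fit on two 16-core, 32 GB hosts when run as VMs. Packing more VMs onto a host raises utilization, and it is also what creates the contention risk listed above.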

When to use each

Bare Metal

You need the best performance possible for demanding workloads.

You have specific hardware requirements or need deep customization.

Latency and processing speed are critical to the application's performance.

Virtual Machines

You need to run multiple operating systems or applications on the same server.

You want flexibility, scalability, and better resource management.

You prioritize ease of management and deployment (especially in cloud environments).

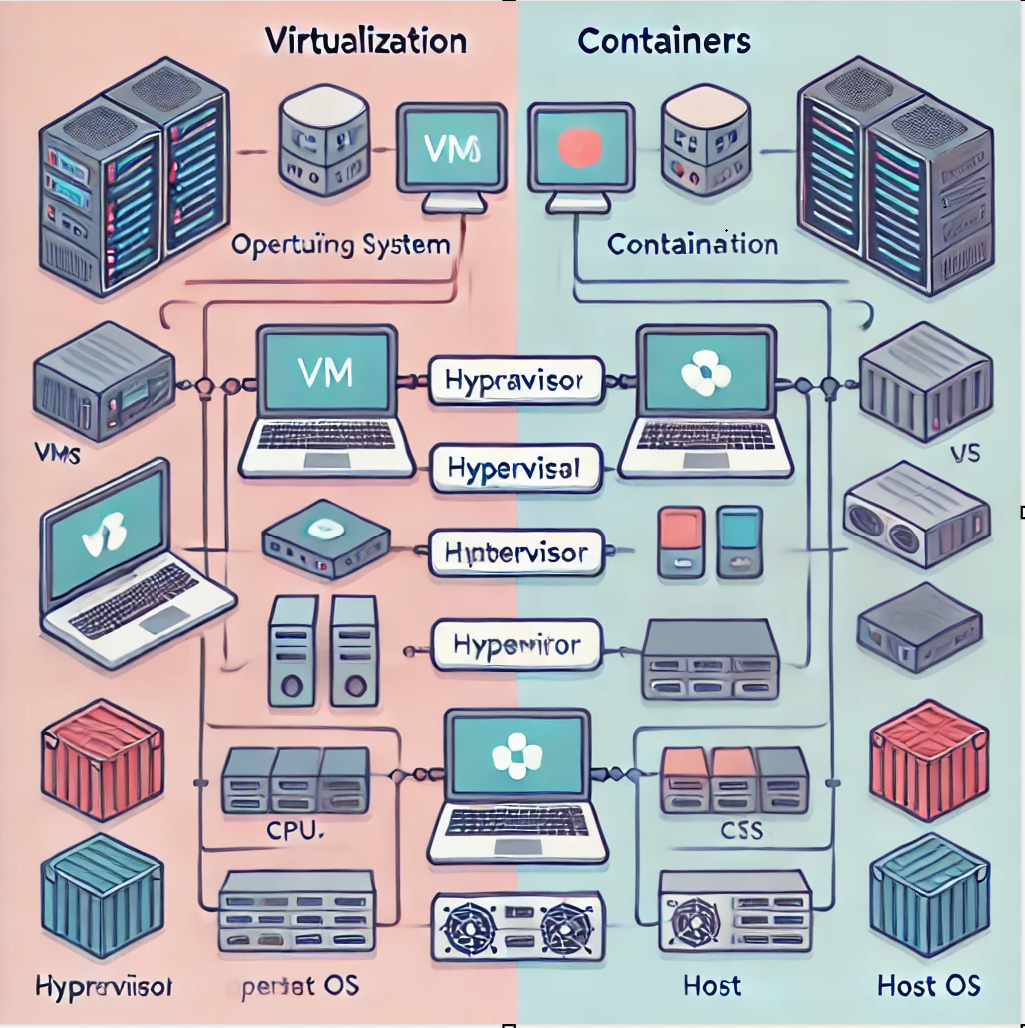

Containerization vs Virtualization

As covered in the previous sections:

Virtualization helps us to create virtual versions of a computer resource such as devices, storage, networks, servers, or even applications.

It allows organizations to partition a single physical computer or server into several virtual machines (VM).

Each VM can then interact independently and run different operating systems or applications while sharing the resources of a single computer.

Some advantages of Virtualization are:

- Enhanced performance

- Promotes the use of resources in an optimum manner

- Space saving

and Containerization:

Containerization is a lightweight alternative to virtualization. This involves encapsulating an application in a container with its own operating environment.

Thus, instead of installing an OS for each virtual machine, containers use the host OS. Since they don’t use a hypervisor, you can enjoy faster resource provisioning.

Some advantages of Containerization are:

- Containers share the machine’s operating system kernel. They do not need to bundle an operating system with the application, so they are lightweight.

- Containers take less time to start in the environment where they are deployed.

- It’s ideal for automation and DevOps pipelines, including continuous integration and continuous deployment (CI/CD) implementation.

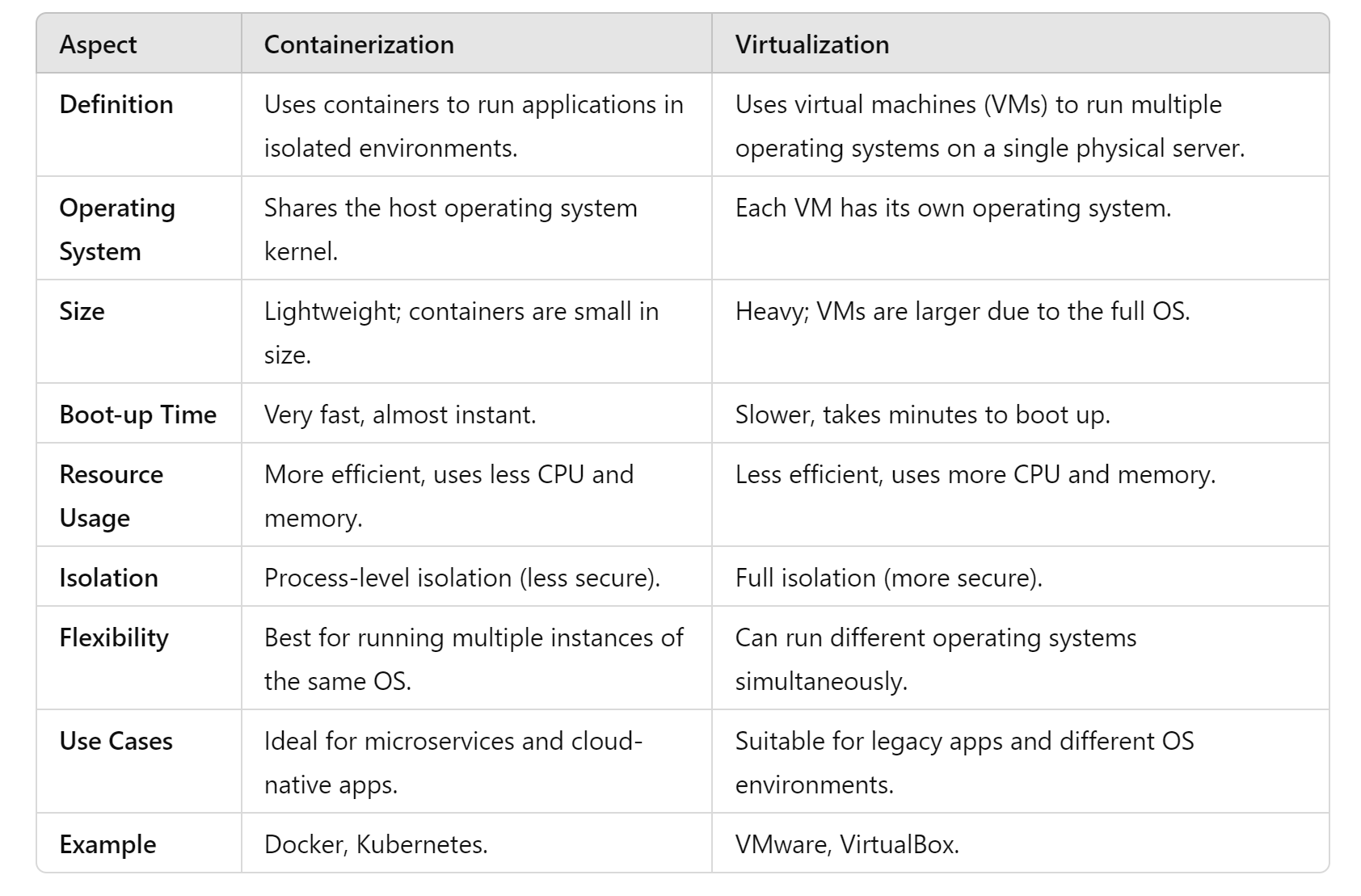

Some of the differences between containerization and virtualization are:

WSL

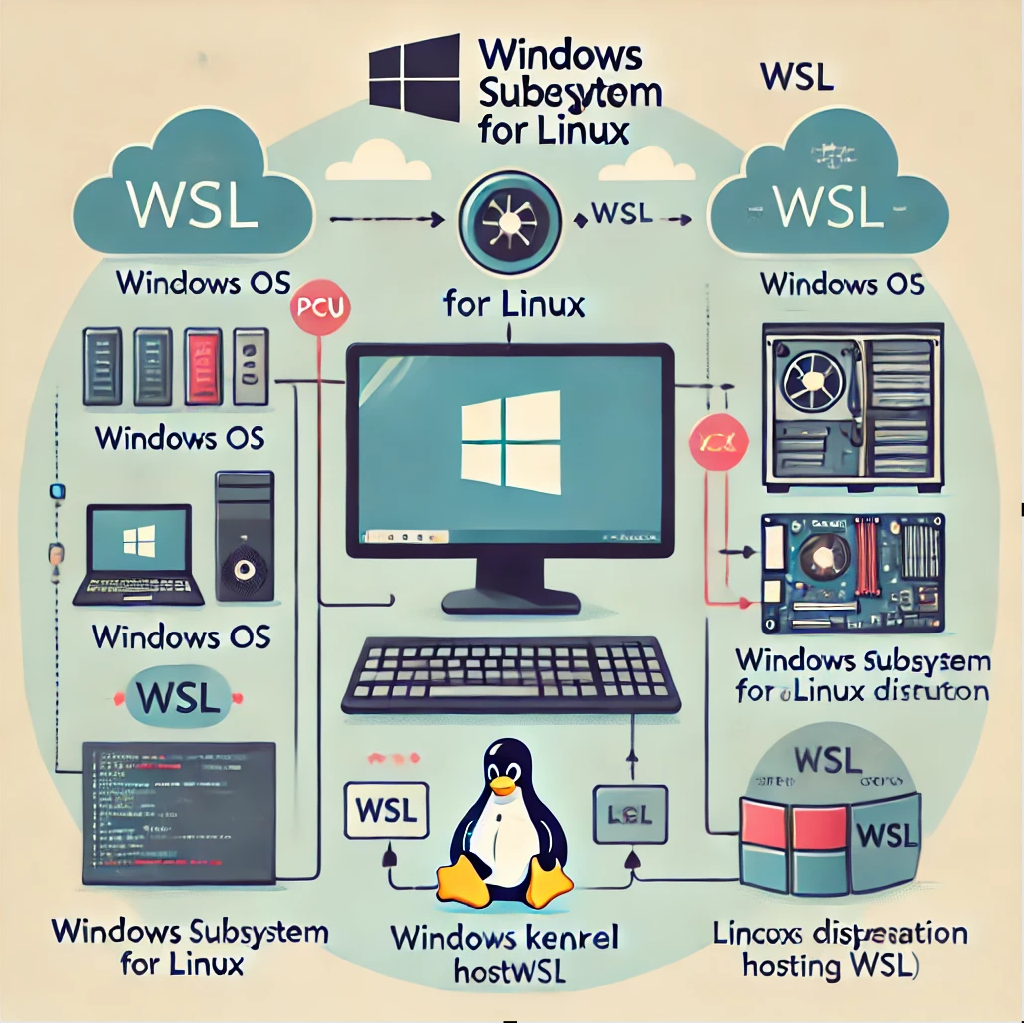

Definition

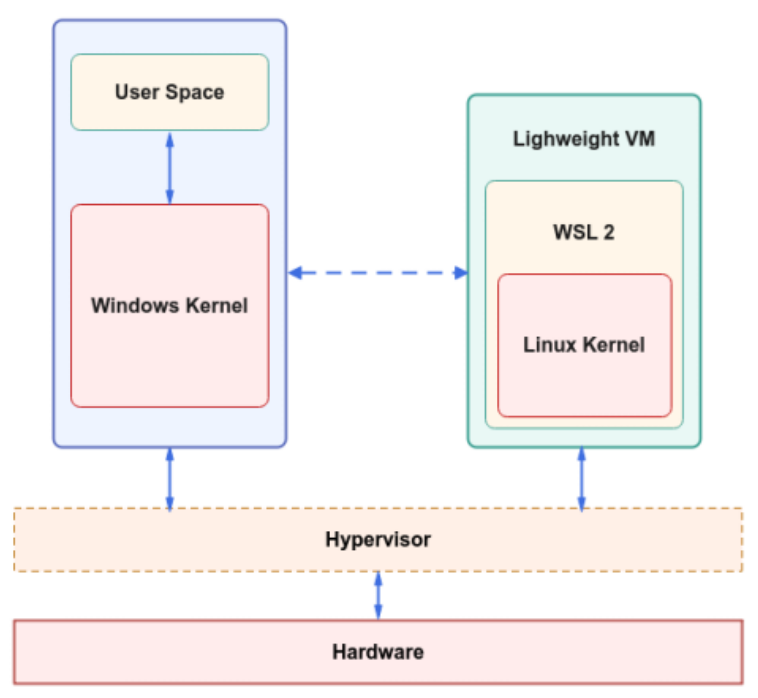

WSL is a tool that lets you run a full Linux environment directly on your Windows computer. It’s like having both Windows and Linux side-by-side without needing to switch between them. You can use Linux commands, run Linux software, and work with files in both systems easily. Think of WSL as a bridge that allows Windows to use Linux features without needing a separate Linux installation.

How does WSL work?

WSL creates a lightweight "virtual machine" inside your Windows system. It lets Windows and Linux work together smoothly by:

- Running Linux software: You can use Linux commands, tools, and even programming environments just as you would on a real Linux machine.

- Sharing files: You can access your Windows files from within the Linux environment and vice versa. This means you can work with the same files on both systems seamlessly.

- Integrated terminal: WSL comes with a terminal (a command-line interface) that feels like Linux but runs inside Windows.

Why is WSL important?

WSL is a big deal for several reasons:

- Easy access to Linux commands: You don’t need to install a separate Linux machine or deal with complicated setups to access Linux commands and software.

- Lightweight: It doesn’t require much memory or storage compared to running a full virtual machine. It’s fast and efficient.

Installation Process:

Installing WSL on Windows

Enable WSL:

Open PowerShell (search for it in the start menu, right-click, and run as administrator).

Type this command and press Enter: wsl --install. This installs WSL and sets up a Linux distribution (usually Ubuntu by default).

Restart your computer: Once the installation finishes, restart your computer.

Choose a Linux distribution:

After restarting, WSL will ask you to choose a Linux distribution (like Ubuntu, Debian, etc.).

Pick one that works best for you (Ubuntu is a popular choice).

Set up your Linux environment:

Once the installation is complete, open the Linux terminal by searching for “Ubuntu” (or the Linux distro you chose).

You’ll be asked to create a username and password for your Linux environment.

Start using Linux commands: You’re now ready to use Linux on Windows! Open the terminal and start running Linux commands.
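Once the Linux terminal is open, a short script can confirm that it really is running under WSL. This is a heuristic sketch, not an official check: it assumes the WSL kernel's release string contains the word "microsoft", which is how WSL kernels commonly identify themselves. The function name `looks_like_wsl` is made up for this illustration.

```python
import platform


def looks_like_wsl(kernel_release: str) -> bool:
    """Heuristic: WSL kernel release strings usually mention Microsoft."""
    return "microsoft" in kernel_release.lower()


if __name__ == "__main__":
    release = platform.release()
    print("kernel release:", release)
    print("running under WSL:", looks_like_wsl(release))
```

Run from the Ubuntu terminal installed above, this should print a release string that mentions Microsoft; on a regular Linux machine it would not.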

For Mac Users:

Mac doesn’t support WSL because it’s a Windows feature. However, Mac already comes with a Unix-based system, which is quite similar to Linux. So, you don't need WSL on a Mac. Instead, you can directly use the Terminal app on your Mac to run Unix-like commands.

But, if you specifically want to run a Linux environment on a Mac, here are some options:

- Use Homebrew: This is a package manager for macOS, which can help you install Linux-like tools and packages.

- Use a virtual machine: You can install software like VirtualBox or VMware to run a full Linux distribution in a virtual machine on your Mac.

Kubernetes vs Docker

As covered in the previous sections:

Kubernetes helps developers manage complex distributed systems by abstracting the underlying infrastructure. It automates the management, scheduling, and coordination of containers across a cluster of machines, which allows users to run applications efficiently without worrying about the underlying infrastructure. Kubernetes also makes it easy to scale applications up or down based on demand: the number of containers running an application can be adjusted automatically.
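The automatic scaling described above can be sketched in a few lines. The core formula is the one documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name, the parameter names, and the default bounds are assumptions for this illustration, and the real autoscaler adds details (such as a tolerance band and stabilization windows) that are left out here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Estimate how many replicas are needed to bring the metric to target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the replica count by how far the metric is from its target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Hypothetical case: 4 replicas at 90% average CPU, target 60%.
    print(desired_replicas(4, 90, 60))
    # Load drops to 30% average CPU: scale back down.
    print(desired_replicas(4, 30, 60))
```

When the metric is above target the count grows, and when it falls below target the count shrinks, always within the minimum and maximum that the operator sets.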

Some advantages of Kubernetes are:

- Portability and Flexibility

- Efficient Resource Utilization

- Rolling Updates and Rollbacks

and Docker

Docker allows users to package an application and all of its dependencies into a standardized unit. It ensures that applications run the same regardless of where they are deployed, which eliminates the common “it works on my machine” issue, since the environment is consistent across all stages.

Some advantages of Docker are:

- Isolation

- Consistency Across Environments

- Lightweight and Fast

Docker

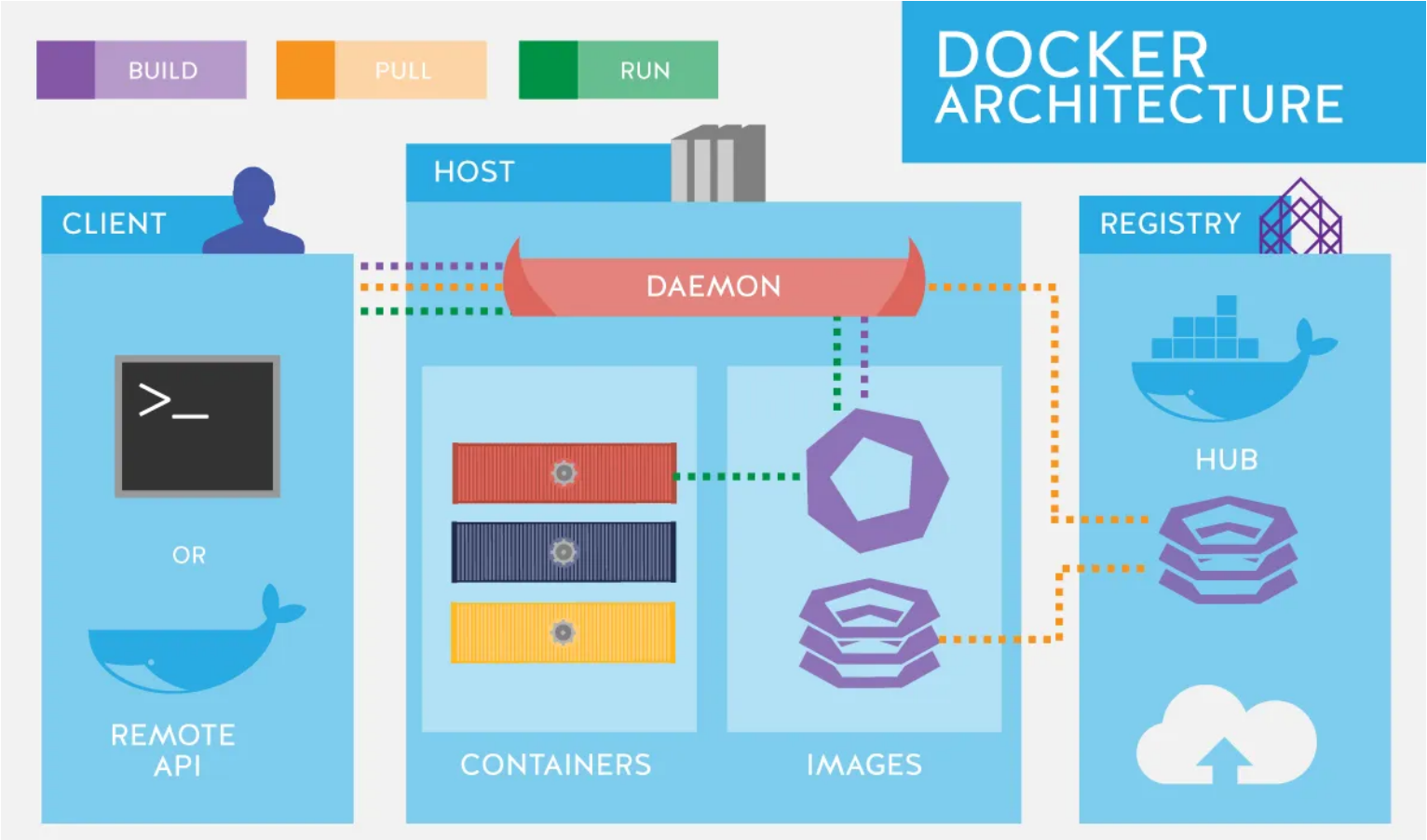

Docker is an open source tool that helps developers build, run, and package an application (and all the things it needs like libraries, code, and settings) using software packages known as “containers.” Docker can allow developers to ship code quickly, standardize application operations, improve resource utilization, simplify workloads, and stay ahead of security issues.

Installing Docker

Windows

1.) Download Docker Desktop from the official website: https://www.docker.com/

2.) Run the Installer: Open the downloaded exe file and follow the installation process.

3.) Enable WSL 2: Use the commands "wsl --install" and "wsl --set-default-version 2" in terminal

4.) Launch Docker.

Mac

1.) Download Docker Desktop from the official website: https://www.docker.com/

2.) Run the installer: Open the .dmg file that was downloaded and drag the icon into the Applications folder.

3.) Launch Docker.
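After installing on either platform, a quick check can confirm that the `docker` command is available. The sketch below is one possible way to do that from Python: it looks for `docker` on the PATH and runs `docker --version`. The function name `docker_version` is made up for this illustration, and the check only shows that the command-line tool is installed, not that the Docker engine is running.

```python
import shutil
import subprocess
from typing import Optional


def docker_version() -> Optional[str]:
    """Return the output of `docker --version`, or None if unavailable."""
    docker_path = shutil.which("docker")
    if docker_path is None:
        return None  # docker is not on the PATH
    try:
        result = subprocess.run(
            [docker_path, "--version"],
            capture_output=True,
            text=True,
            timeout=10,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    return result.stdout.strip()


if __name__ == "__main__":
    version = docker_version()
    if version is None:
        print("Docker was not found. Check the installation steps above.")
    else:
        print("Found:", version)
```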